1 Linear Equations in Linear Algebra

1.1 Systems of Linear Equations

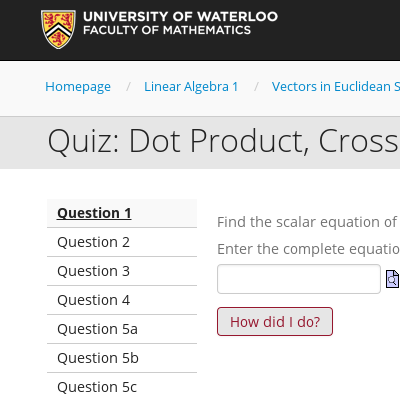

A 3x3 system having a unique solution is solved by putting the augmented matrix in reduced row echelon form. A picture of three intersecting planes provides geometric intuition.

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of one solution math.la.e.linsys.3x3.soln.row_reduce.o

Definition of matrix in reduced row echelon form math.la.d.mat.rref

Linear systems have zero, one, or infinitely many solutions. math.la.t.linsys.zoi

Definition of consistent linear system math.la.d.linsys.consistent

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Review

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8
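
The row reduction described in this entry can be sketched in a few lines. This is a minimal illustration, not the method shown in the video: the function name `rref` and the sample system are assumptions chosen for the example, and exact fractions are used so no rounding occurs.

```python
from fractions import Fraction

def rref(matrix):
    """Return the reduced row echelon form of a matrix given as a list of rows."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        source = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if source is None:
            continue  # no pivot in this column
        rows[pivot_row], rows[source] = rows[source], rows[pivot_row]  # interchange
        pivot = rows[pivot_row][col]
        rows[pivot_row] = [x / pivot for x in rows[pivot_row]]         # scaling
        for r in range(n_rows):
            if r != pivot_row and rows[r][col] != 0:
                factor = rows[r][col]
                # replacement: subtract a multiple of the pivot row
                rows[r] = [a - factor * p for a, p in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
    return rows

# Hypothetical 3x3 system (not taken from the video):
#    x +  y +  z =  6
#        2y + 5z = -4
#   2x + 5y -  z = 27
augmented = [[1, 1, 1, 6], [0, 2, 5, -4], [2, 5, -1, 27]]
reduced = rref(augmented)
solution = [row[-1] for row in reduced]  # x = 5, y = 3, z = -2
```

Because the left 3x3 block of `reduced` is the identity matrix, the last column can be read off directly as the unique solution.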

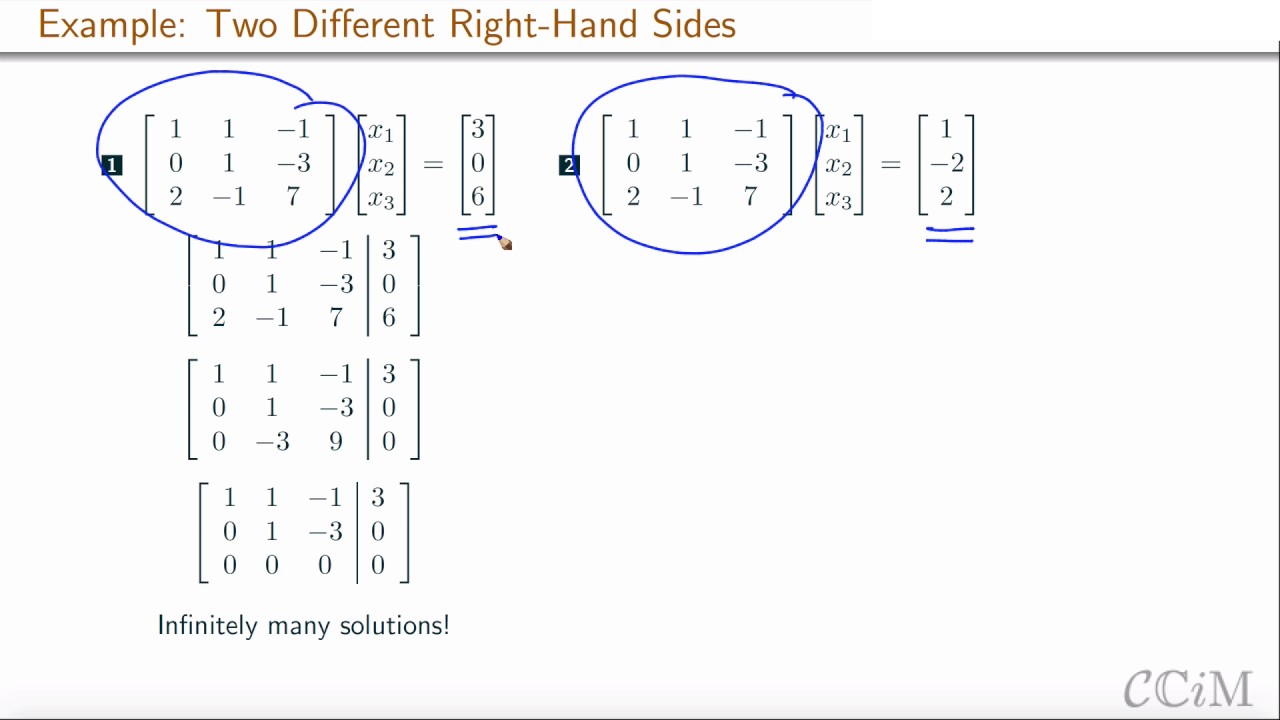

A 3x3 matrix equation Ax=b is solved for two different values of b. In one case there is no solution, and in another there are infinitely many solutions. These examples illustrate a theorem about linear combinations of the columns of the matrix A.

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of no solutions math.la.e.linsys.3x3.soln.row_reduce.z

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.row_reduce.i

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

Definition of matrix equation math.la.d.mat.eqn

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8
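
One way to see the theorem at work is to compare the number of pivots in A with the number of pivots in the augmented matrix [A | b]. The sketch below makes that comparison; the helper names `rank` and `classify` and the matrix `A` are assumptions for illustration and are not the examples used in the video.

```python
from fractions import Fraction

def rank(matrix):
    """Count the pivots found by forward elimination (exact arithmetic)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    pivots = 0
    for col in range(len(rows[0])):
        source = next((r for r in range(pivots, len(rows)) if rows[r][col] != 0), None)
        if source is None:
            continue  # no pivot in this column
        rows[pivots], rows[source] = rows[source], rows[pivots]
        for r in range(pivots + 1, len(rows)):
            factor = rows[r][col] / rows[pivots][col]
            rows[r] = [a - factor * p for a, p in zip(rows[r], rows[pivots])]
        pivots += 1
    return pivots

def classify(A, b):
    """Describe the solution set of Ax = b."""
    augmented = [row + [bi] for row, bi in zip(A, b)]
    if rank(A) < rank(augmented):
        return "no solution"  # b is not a linear combination of the columns of A
    return "unique solution" if rank(A) == len(A[0]) else "infinitely many solutions"

# Hypothetical matrix whose third column equals 2*(second column) - (first column).
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

print(classify(A, [1, 0, 0]))    # no solution
print(classify(A, [3, 9, 15]))   # infinitely many solutions (b = column 1 + column 2)
```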

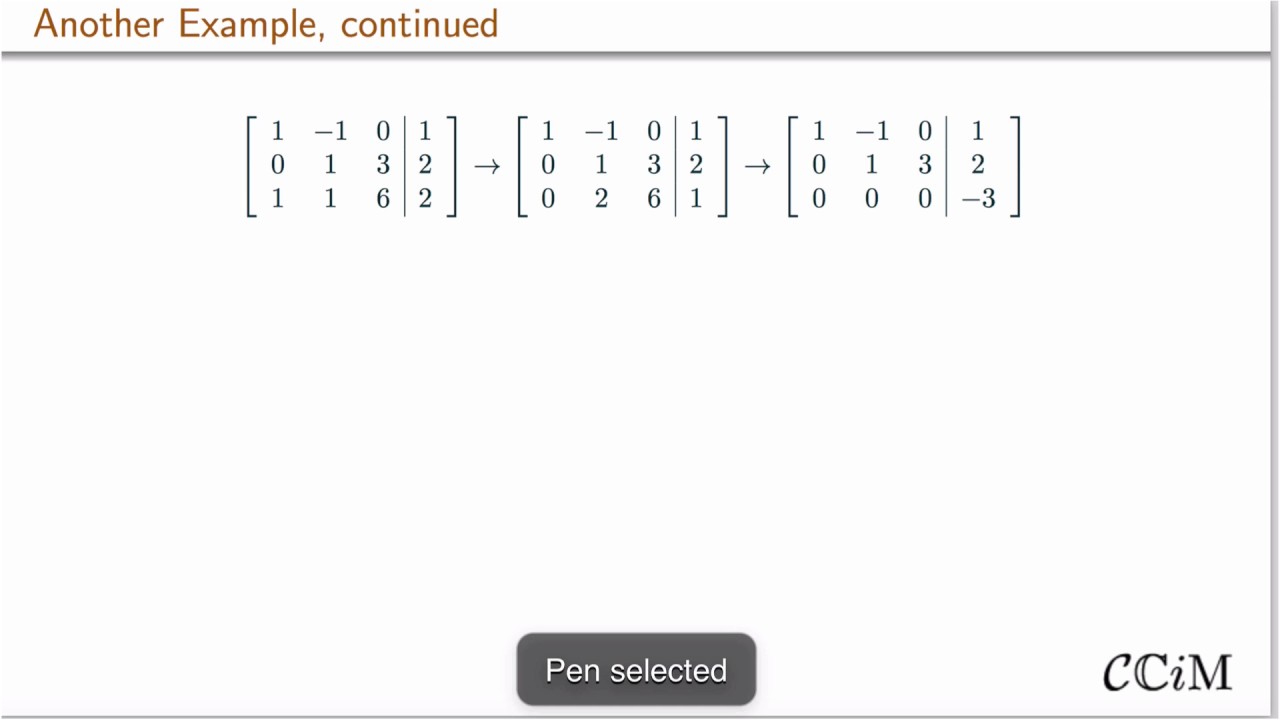

The reduced row echelon form is used to determine when a 3x3 system is inconsistent. A picture of planes in 3-dimensional space is used to provide geometric intuition.

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of no solutions math.la.e.linsys.3x3.soln.row_reduce.z

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 4

- Type

- Video

- Timeframe

- Review

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

Sample problems to help understand when a linear system has 0, 1, or infinitely many solutions.

Linear systems have zero, one, or infinitely many solutions. math.la.t.linsys.zoi

math.la.t.rref.consistent

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- In-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

Notation for matrix entries, diagonal matrix, square matrix, identity matrix, and zero matrix.

Notation for entry of matrix math.la.d.mat.entry

Definition of m by n matrix math.la.d.mat.m_by_n

Definition of diagonal matrix math.la.d.mat.diagonal

Definition of identity matrix math.la.d.mat.identity

Definition of zero matrix math.la.d.mat.zero

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Equivalence of systems of linear equations, row operations, and the corresponding matrices that represent linear systems.

Definition of equivalent systems of linear equations math.la.d.linsys.equiv

Definition of row operations on a matrix math.la.d.mat.row_op

Definition of row equivalent matrices math.la.d.mat.row_equiv

Row equivalent matrices represent equivalent linear systems math.la.t.mat.row_equiv.linsys

Example of putting a matrix in echelon form math.la.e.mat.echelon.of

- Created On

- August 21st, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8
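
The claim that row equivalent matrices represent equivalent linear systems can be checked on a small case. The following sketch applies each of the three elementary row operations to an augmented matrix and confirms that a known solution still satisfies every equation. The function names and the sample system are assumptions made for this illustration.

```python
def swap(m, i, j):
    """Interchange rows i and j."""
    m = [row[:] for row in m]
    m[i], m[j] = m[j], m[i]
    return m

def scale(m, i, c):
    """Multiply row i by a nonzero constant c."""
    if c == 0:
        raise ValueError("scaling by zero is not a row operation")
    m = [row[:] for row in m]
    m[i] = [c * x for x in m[i]]
    return m

def replace(m, i, j, c):
    """Replace row i by (row i) + c * (row j)."""
    m = [row[:] for row in m]
    m[i] = [a + c * b for a, b in zip(m[i], m[j])]
    return m

def satisfies(augmented, x):
    """True if x solves every equation encoded by the augmented matrix."""
    return all(sum(a * xi for a, xi in zip(row[:-1], x)) == row[-1]
               for row in augmented)

# Hypothetical system with solution (1, 0, -1):
#    x1 - 2*x2 +   x3 =  0
#         2*x2 - 8*x3 =  8
#  5*x1        - 5*x3 = 10
system = [[1, -2, 1, 0], [0, 2, -8, 8], [5, 0, -5, 10]]

step1 = swap(system, 0, 2)
step2 = scale(step1, 1, 3)
step3 = replace(step2, 2, 0, -1)
```

A vector that solves `system` also solves `step3`, and a vector that fails the original system fails the transformed one, since each operation can be undone by another row operation.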

How to compute all solutions to a general system $Ax=b$ of linear equations and connection to the corresponding homogeneous system $Ax=0$. Visualization of the geometry of solution sets. Consistent systems and their solution using row reduction.

Example of solving a 3-by-3 homogeneous system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.homog.row_reduce.i

Definition of homogeneous linear system of equations math.la.d.linsys.homog

A homogeneous system has a nontrivial solution if and only if it has a free variable. math.la.t.linsys.homog.nontrivial

Parametric vector form of the solution set of a system of linear equations math.la.c.linsys.soln_set.vec

Definition of trivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.trivial

Definition of nontrivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.nontrivial

- Created On

- August 22nd, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8
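
To make the link between free variables and nontrivial solutions concrete, the sketch below reads a spanning set for the solutions of a homogeneous system directly from a coefficient matrix that is already in reduced row echelon form. The function name `null_space_basis` and the sample matrix are assumptions for illustration only.

```python
from fractions import Fraction

def null_space_basis(R):
    """Given a coefficient matrix R already in reduced row echelon form,
    return one solution of R x = 0 for each free variable."""
    n_cols = len(R[0])
    # In RREF the nonzero rows come first, so the k-th pivot lies in row k.
    pivot_cols = []
    for row in R:
        lead = next((j for j, x in enumerate(row) if x != 0), None)
        if lead is not None:
            pivot_cols.append(lead)
    free_cols = [j for j in range(n_cols) if j not in pivot_cols]
    basis = []
    for f in free_cols:
        v = [Fraction(0)] * n_cols
        v[f] = Fraction(1)                 # set this free variable to 1, the others to 0
        for i, p in enumerate(pivot_cols):
            v[p] = -Fraction(R[i][f])      # solve row i for its basic variable
        basis.append(v)
    return basis

# Hypothetical RREF coefficient matrix: x1 - 2*x3 = 0, x2 + 3*x3 = 0, x3 free.
R = [[1, 0, -2], [0, 1, 3], [0, 0, 0]]
basis = null_space_basis(R)   # [[2, -3, 1]]
```

Here the solution set in parametric vector form is all multiples t·(2, −3, 1). When every column is a pivot column the function returns an empty list, which corresponds to the trivial solution being the only one.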

Homogeneous systems of linear equations; trivial versus nontrivial solutions of homogeneous systems; how to find nontrivial solutions; how to know from the reduced row-echelon form of a matrix whether the corresponding homogeneous system has nontrivial solutions.

Definition of homogeneous linear system of equations math.la.d.linsys.homog

Definition of trivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.trivial

Definition of nontrivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.nontrivial

A homogeneous system has a nontrivial solution if and only if it has a free variable. math.la.t.linsys.homog.nontrivial

Example of solving a 3-by-3 homogeneous system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.homog.row_reduce.i

- Created On

- August 25th, 2017

- 7 years ago

- Views

- 4

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

We will motivate our study of linear algebra by considering the problem of solving several linear equations simultaneously. The word solve tends to get abused somewhat, as in “solve this problem.” When talking about equations we understand a more precise meaning: find all of the values of some variable quantities that make an equation, or several equations, simultaneously true.

Definition of system of linear equations math.la.d.linsys

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We will motivate our study of linear algebra by considering the problem of solving several linear equations simultaneously. The word solve tends to get abused somewhat, as in “solve this problem.” When talking about equations we understand a more precise meaning: find all of the values of some variable quantities that make an equation, or several equations, simultaneously true.

Definition of solution set of a system of linear equations math.la.d.linsys.soln_set

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We begin our study of linear algebra with an introduction and a motivational example.

Definition of linear equation math.la.d.lineqn

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We will motivate our study of linear algebra by considering the problem of solving several linear equations simultaneously. The word solve tends to get abused somewhat, as in “solve this problem.” When talking about equations we understand a more precise meaning: find all of the values of some variable quantities that make an equation, or several equations, simultaneously true.

Definition of solution to a system of linear equations math.la.d.linsys.soln

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We will motivate our study of linear algebra by considering the problem of solving several linear equations simultaneously. The word solve tends to get abused somewhat, as in “solve this problem.” When talking about equations we understand a more precise meaning: find all of the values of some variable quantities that make an equation, or several equations, simultaneously true.

Definition of equivalent systems of linear equations math.la.d.linsys.equiv

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of matrix math.la.d.mat

Definition of m by n matrix math.la.d.mat.m_by_n

math.la.c.mat.entry

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of row operations on a matrix math.la.d.mat.row_op

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of coefficient matrix of a linear system math.la.d.mat.coeff

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of augmented matrix of a linear system math.la.d.mat.augmented

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of row equivalent matrices math.la.d.mat.row_equiv

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We will now be more careful about analyzing the reduced row-echelon form derived from the augmented matrix of a system of linear equations. In particular, we will see how to systematically handle the situation when we have infinitely many solutions to a system, and we will prove that every system of linear equations has either zero, one or infinitely many solutions. With these tools, we will be able to routinely solve any linear system.

Linear systems have zero, one, or infinitely many solutions. math.la.t.linsys.zoi

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We will now be more careful about analyzing the reduced row-echelon form derived from the augmented matrix of a system of linear equations. In particular, we will see how to systematically handle the situation when we have infinitely many solutions to a system, and we will prove that every system of linear equations has either zero, one or infinitely many solutions. With these tools, we will be able to routinely solve any linear system.

Definition of consistent linear system math.la.d.linsys.consistent

Definition of inconsistent linear system math.la.d.linsys.inconsistent

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Row equivalent matrices represent equivalent linear systems math.la.t.mat.row_equiv.linsys

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Definition of coefficients of a linear equation math.la.d.lineqn.coeff

Definition of size of a matrix math.la.d.mat.size

Example of solving a 3-by-3 homogeneous system of linear equations by row-reducing the augmented matrix, in the case of one solution math.la.e.linsys.3x3.soln.homog.row_reduce.o

1.2 Row Reduction and Echelon Forms

A 3x3 system having a unique solution is solved by putting the augmented matrix in reduced row echelon form. A picture of three intersecting planes provides geometric intuition.

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of one solution math.la.e.linsys.3x3.soln.row_reduce.o

Definition of matrix in reduced row echelon form math.la.d.mat.rref

Linear systems have zero, one, or infinitely many solutions. math.la.t.linsys.zoi

Definition of consistent linear system math.la.d.linsys.consistent

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Review

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

Equivalence of systems of linear equations, row operations, and the corresponding matrices that represent linear systems.

Definition of equivalent systems of linear equations math.la.d.linsys.equiv

Definition of row operations on a matrix math.la.d.mat.row_op

Definition of row equivalent matrices math.la.d.mat.row_equiv

Row equivalent matrices represent equivalent linear systems math.la.t.mat.row_equiv.linsys

Example of putting a matrix in echelon form math.la.e.mat.echelon.of

- Created On

- August 21st, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

Definition of echelon form, reduction of a matrix to echelon form in order to compute solutions to systems of linear equations; definition of reduced row echelon form

Definition of (row) echelon form of a matrix math.la.d.mat.echelon.of

Example of (echelon matrix/matrix in (row) echelon form) math.la.e.mat.echelon

Definition of matrix in reduced row echelon form math.la.d.mat.rref

Definition of reduced row echelon form of a matrix math.la.d.mat.rref.of

Definition of leading entry in a row of a matrix math.la.d.mat.row.leading

Definition of (echelon matrix/matrix in (row) echelon form) math.la.d.mat.echelon

Example of putting a matrix in echelon form math.la.e.mat.echelon.of

- Created On

- August 25th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8
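
The definitions of leading entry, echelon form, and reduced row echelon form can be turned into direct checks. This is a small sketch under the usual textbook definitions; the function names and test matrices are assumptions, not material from the video.

```python
def leading_index(row):
    """Column index of the leading (first nonzero) entry, or None for a zero row."""
    return next((j for j, x in enumerate(row) if x != 0), None)

def is_echelon(m):
    """Zero rows sit at the bottom and leading entries move strictly to the right."""
    seen_zero_row = False
    previous = -1
    for row in m:
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True
        elif seen_zero_row or lead <= previous:
            return False
        else:
            previous = lead
    return True

def is_rref(m):
    """Echelon form, every leading entry is 1, and it is the only nonzero
    entry in its column."""
    if not is_echelon(m):
        return False
    for i, row in enumerate(m):
        lead = leading_index(row)
        if lead is None:
            continue
        if row[lead] != 1:
            return False
        if any(other[lead] != 0 for k, other in enumerate(m) if k != i):
            return False
    return True

echelon_only = [[2, -3, 2, 1], [0, 1, -4, 8], [0, 0, 0, 5]]
reduced = [[1, 0, 0, 5], [0, 1, 0, 3], [0, 0, 1, -2]]
not_echelon = [[0, 1], [1, 0]]
```

The columns returned by `leading_index` for the nonzero rows of an echelon form are the pivot columns of the matrix.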

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Every matrix is row-equivalent to a matrix in reduced row echelon form. math.la.t.mat.rref.exists

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Every matrix is row-equivalent to only one matrix in reduced row echelon form. math.la.t.mat.rref.unique

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of matrix in reduced row echelon form math.la.d.mat.rref

Definition of pivot column math.la.d.mat.pivot_col

Definition of leading entry in a row of a matrix math.la.d.mat.row.leading

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Definition of row reduce a matrix math.la.d.mat.row_reduce

Definition of pivot position math.la.d.mat.pivot_position

Example of putting a matrix in echelon form and identifying the pivot columns math.la.e.mat.echelon.of.pivot

math.la.e.mat.echelon.of.4x4

Definition of pivot math.la.d.mat.pivot

math.la.d.insys.variable.basic

math.la.d.insys.variable.free

math.la.e.linsys.3x5.soln.row_reduce.i

The echelon form can be used to determine if a linear system is consistent. math.la.t.echelon.consistent

Example of using the echelon form to determine if a linear system is consistent. math.la.e.echelon.consistent

1.3 Vector Equations

The product of a matrix and a vector is defined and used to show that a system of linear equations is equivalent to a single equation involving a matrix and vectors. The example uses a 2x3 system.

Definition of matrix-scalar multiplication math.la.d.mat.scalar.mult

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

A matrix equation is equivalent to a linear system math.la.t.mat.eqn.linsys

- License

- CC-BY-SA-4.0

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8
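
The two ways of reading a matrix-vector product, row by row and as a linear combination of columns, can be compared in code. The sketch below uses a hypothetical 2x3 matrix; the function names are assumptions chosen for the illustration.

```python
def mat_vec(A, x):
    """Row view: entry i of Ax is the dot product of row i of A with x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def column_combination(A, x):
    """Column view: Ax = x1*a1 + x2*a2 + ..., where a_j is column j of A."""
    result = [0] * len(A)
    for j, weight in enumerate(x):
        for i in range(len(A)):
            result[i] += weight * A[i][j]
    return result

# Hypothetical 2x3 example: two equations in three unknowns.
A = [[1, 2, -1],
     [0, -5, 3]]
x = [4, 3, 7]

print(mat_vec(A, x))             # [3, 6]
print(column_combination(A, x))  # [3, 6]
```

Both views give the same vector, which is why the system of equations, the vector equation, and the matrix equation Ax = b all have the same solution set.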

Definition of vector, equality of vectors, vector addition, and scalar vector multiplication. Geometric and algebraic properties of vector addition are discussed. (need a topic on vector addition is commutative and associative)

Definition of column vector, coordinate vector space math.la.d.vec.col.coord

Definition of vector, coordinate vector space math.la.d.vec.coord

Definition of equality of vectors, coordinate vector space math.la.d.vec.equal.coord

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

math.la.t.vec.sum.geometric.RnCn

- Created On

- February 19th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8
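
Vector addition and scalar multiplication act entry by entry, which the following sketch spells out. The helper names `add` and `scale` and the sample vectors are assumptions for illustration.

```python
def add(u, v):
    """Entrywise sum of two vectors of the same size."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of entries")
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    """Multiply every entry of v by the scalar c."""
    return [c * a for a in v]

u = [1, -2]
v = [2, -5]
w = [3, 4]

combo = add(scale(4, u), scale(-3, v))   # 4u + (-3)v = [-2, 7]

# Spot checks of the algebraic properties on these particular vectors.
commutative = add(u, v) == add(v, u)
associative = add(add(u, v), w) == add(u, add(v, w))
```

These checks only confirm the properties for one choice of vectors; the general statements follow from the corresponding properties of real or complex numbers applied to each entry.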

The linear combination of a set of vectors is defined. Determine if a vector in R^2 is in the span of two other vectors. The span of a set of vectors is related to the columns of a matrix. (need topic: Determine if a vector in R^2 is in the span of two other vectors.)

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- Created On

- February 20th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Definition of the span of a set of vectors. Example of checking if a vector in R^3 is in the span of a set of two vectors. Geometric picture of a span.

Determine if a particular vector is in the span of a set of vectors in R^3 math.la.e.vec.span.of.r3

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

Geometric description of span of a set of vectors in R^n (or C^n) math.la.c.vec.span.geometric.rncn

- Created On

- February 20th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8
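
For two non-parallel vectors in R^3 there is a quick geometric membership test: their span is a plane through the origin, and a vector b lies in that plane exactly when b is orthogonal to the cross product of the two vectors. This is a substitute technique for illustration, not the row-reduction approach a course would normally use, and it applies only in R^3. The function names and vectors are assumptions.

```python
def cross(u, v):
    """Cross product of two vectors in R^3."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def in_span_of_two(b, u, v):
    """Test whether b lies in Span{u, v}, assuming u and v are not parallel."""
    normal = cross(u, v)
    if normal == [0, 0, 0]:
        raise ValueError("u and v are parallel; their span is not a plane")
    return dot(b, normal) == 0

a1 = [1, -2, 3]
a2 = [5, -13, -3]

print(in_span_of_two([-3, 8, 1], a1, a2))   # False
print(in_span_of_two([7, -17, 3], a1, a2))  # True, since 2*a1 + a2 = (7, -17, 3)
```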

Suggestions for in-class activities on linear combination and span of vectors in R^n. (need a topic for the general *process* of determining if a vector is in the span of a set of vectors)

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

- Created On

- February 20th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- In-class

- Language

- English

- Content Type

- text/html; charset=utf-8

In-class activity for linear combinations and span.

Geometric description of span of a set of vectors in R^n (or C^n) math.la.c.vec.span.geometric.rncn

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

Determine if a particular vector is in the span of a set of vectors in R^3 math.la.e.vec.span.of.r3

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

- License

- GFDL-1.3

- Created On

- June 8th, 2017

- 7 years ago

- Views

- 3

- Type

- Handout

- Timeframe

- In-class

- Perspective

- Introduction

- Language

- English

- Content Type

- application/pdf

From the University of Waterloo Math Online

Definition of vector, coordinate vector space math.la.d.vec.coord

Definition of column vector, coordinate vector space math.la.d.vec.col.coord

Definition of equality of vectors, coordinate vector space math.la.d.vec.equal.coord

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

Definition of scalar, coordinate vector space math.la.d.scalar

Example of a sum of vectors interpreted geometrically in R^2 math.la.e.vec.sum.geometric.r2

Example of vector-scalar multiplication in R^2 math.la.e.vec.scalar.mult.r2

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 2

- Type

- Video

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html;charset=UTF-8

Slides for the accompanying video from University of Waterloo.

Definition of column vector, coordinate vector space math.la.d.vec.col.coord

Definition of vector, coordinate vector space math.la.d.vec.coord

Definition of equality of vectors, coordinate vector space math.la.d.vec.equal.coord

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

Definition of scalar, coordinate vector space math.la.d.scalar

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 3

- Type

- Handout

- Perspective

- Introduction

- Language

- English

- Content Type

- application/pdf

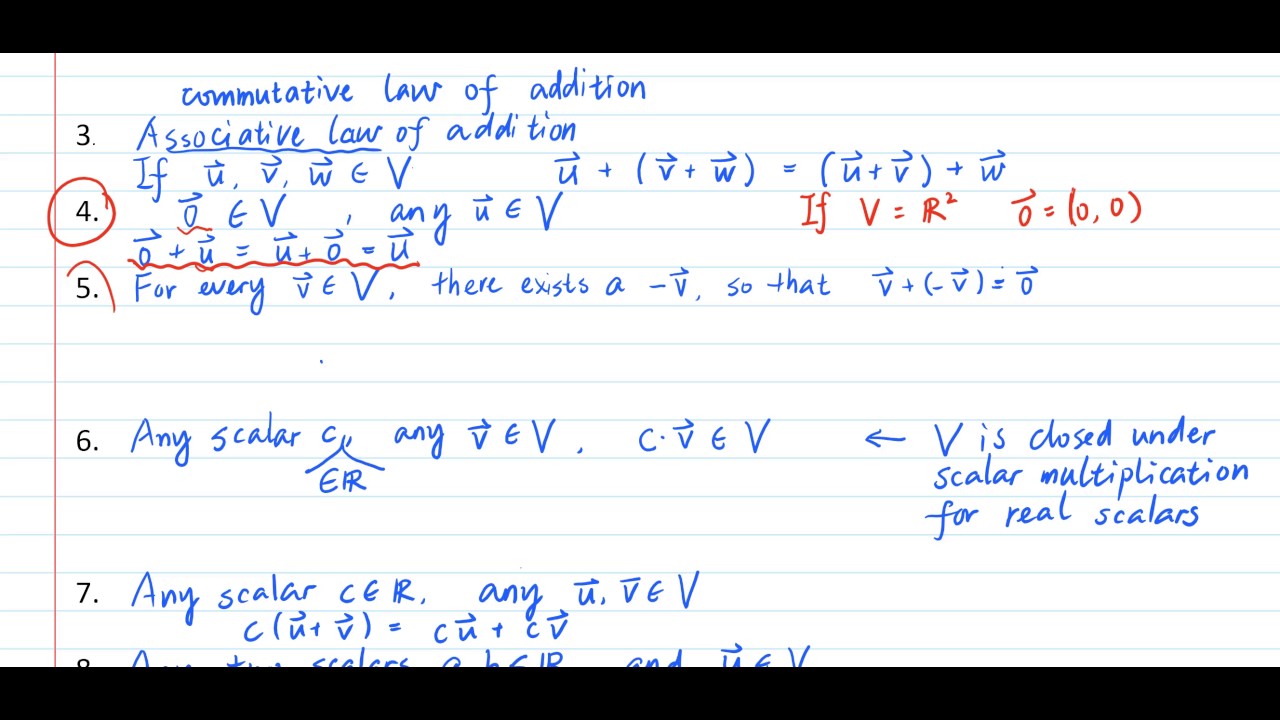

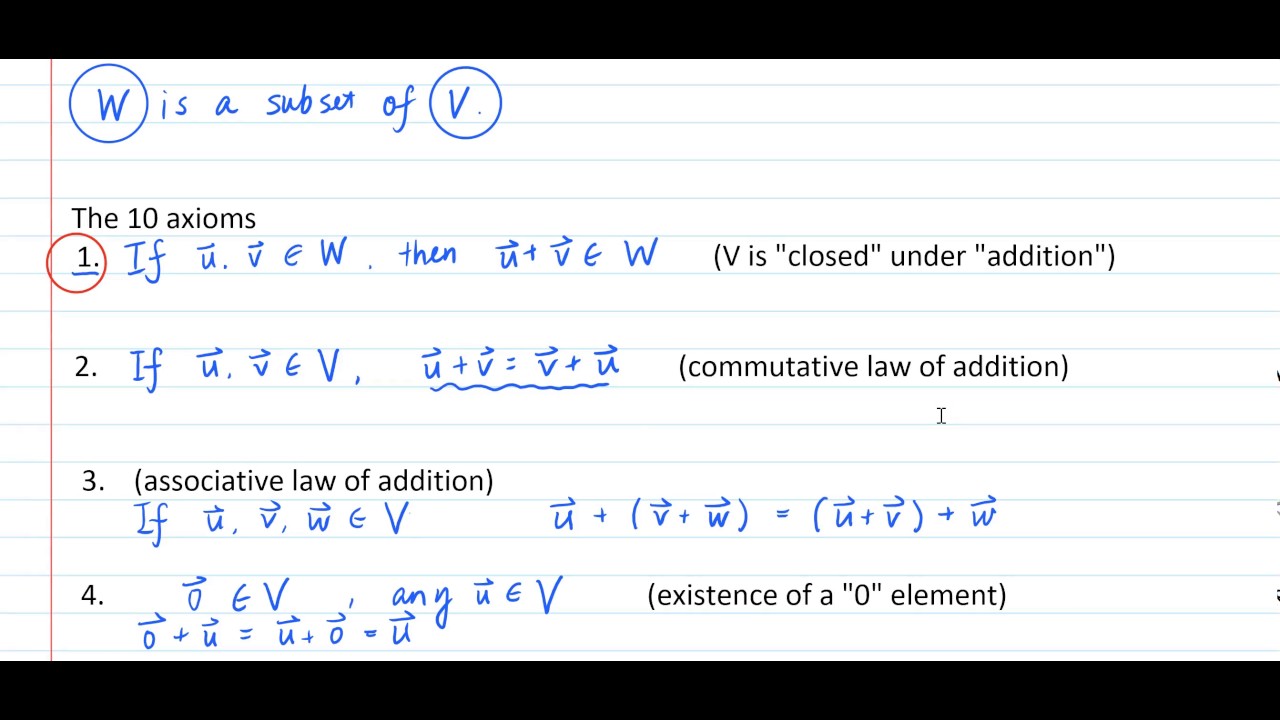

From the University of Waterloo Math Online

The vector space properties of R^n (or C^n) math.la.t.vec.axioms.rncn

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

Geometric description of span of a set of vectors in R^n (or C^n) math.la.c.vec.span.geometric.rncn

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 3

- Type

- Video

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html;charset=UTF-8

Slides from the corresponding video from the University of Waterloo.

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

Geometric description of span of a set of vectors in R^n (or C^n) math.la.c.vec.span.geometric.rncn

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 3

- Type

- Handout

- Perspective

- Introduction

- Language

- English

- Content Type

- application/pdf

Quiz from the University of Waterloo.

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

Example of vector-scalar multiplication in R^2 math.la.e.vec.scalar.mult.r2

Example of linear combination of vectors in R^2 math.la.e.vec.lincomb.r2

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

Determine if a particular vector is in the span of a set of vectors in R^3 math.la.e.vec.span.of.r3

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

Determine if a particular set of vectors in R^3 is linearly independent math.la.e.vec.linindep.r3

Definition of linear dependence relation math.la.d.vec.lindep.relation

A set of two vectors is linearly dependent if and only if at least one of them is a scalar multiple of the other. math.la.t.vec.lindep.two

Theorem: a set of vectors is linearly dependent if and only if one of the vectors can be written as a linear combination of the other vectors, coordinate vector space. math.la.t.vec.lindep.coord

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 4

- Type

- Unknown

- Timeframe

- Post-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html;charset=UTF-8
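
For exactly three vectors in R^3, linear independence can be tested with a determinant: the vectors are independent if and only if the 3x3 matrix built from them has nonzero determinant. The sketch below is one possible check, not the method used in the quiz; the function names and sample vectors are assumptions.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def independent_in_r3(v1, v2, v3):
    """Three vectors in R^3 are linearly independent iff the determinant is nonzero.
    The vectors are used as rows, which gives the same determinant as using columns."""
    return det3([v1, v2, v3]) != 0

v1, v2, v3 = [1, 2, 3], [4, 5, 6], [2, 1, 0]

print(independent_in_r3(v1, v2, v3))                      # False
print(independent_in_r3([1, 0, 0], [0, 1, 0], [0, 0, 1])) # True
```

The first set is dependent because 2·v1 − v2 + v3 = 0, which is a linear dependence relation with coefficients that are not all zero.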

Definition of a vector; vector addition; scalar multiplication; visualization in R^2 and R^3; vector space axioms; linear combinations; span.

Definition of linear combination of vectors, arbitrary vector space math.la.d.vec.lincomb.arb

Example of linear combination of vectors in R^2 math.la.e.vec.lincomb.r2

Example of writing a given vector in R^3 as a linear combination of given vectors math.la.e.vec.lincomb.weight.solve.r3

- Created On

- September 3rd, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

After solving a few systems of equations, you will recognize that it does not matter so much what we call our variables, as opposed to what numbers act as their coefficients. A system in the variables \(x_1,\,x_2,\,x_3\) would behave the same if we changed the names of the variables to \(a,\,b,\,c\) and kept all the constants the same and in the same places. In this section, we will isolate the key bits of information about a system of equations into something called a matrix, and then use this matrix to systematically solve the equations. Along the way we will obtain one of our most important and useful computational tools.

Definition of column vector, coordinate vector space math.la.d.vec.col.coord

Definition of size of a vector, coordinate vector space math.la.d.vec.size.coord

Definition of vector, coordinate vector space math.la.d.vec.coord

Definition of entry/component of a vector, coordinate vector space math.la.d.vec.component.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In this section we define some new operations involving vectors, and collect some basic properties of these operations. Begin by recalling our definition of a column vector as an ordered list of complex numbers, written vertically (Definition CV). The collection of all possible vectors of a fixed size is a commonly used set, so we start with its definition.

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In this section we will provide an extremely compact way to describe an infinite set of vectors, making use of linear combinations. This will give us a convenient way to describe the solution set of a linear system, the null space of a matrix, and many other sets of vectors.

Definition of span of a set of vectors, coordinate vector space math.la.d.vec.span.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In this section we define some new operations involving vectors, and collect some basic properties of these operations. Begin by recalling our definition of a column vector as an ordered list of complex numbers, written vertically (Definition CV). The collection of all possible vectors of a fixed size is a commonly used set, so we start with its definition.

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In Section VO we defined vector addition and scalar multiplication. These two operations combine nicely to give us a construction known as a linear combination, a construct that we will work with throughout this course.

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In this section we define some new operations involving vectors, and collect some basic properties of these operations. Begin by recalling our definition of a column vector as an ordered list of complex numbers, written vertically (Definition CV). The collection of all possible vectors of a fixed size is a commonly used set, so we start with its definition.

Definition of equality of vectors, coordinate vector space math.la.d.vec.equal.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Vector sum/addition interpreted geometrically in R^n (or C^n) math.la.t.vec.sum.geometric.rncn

Definition of weights in a linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.weight.coord
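
The definitions listed in this section (vector sum, scalar multiple, linear combination, weights) can be made concrete with a few lines of code. The following is a minimal sketch, assuming vectors are stored as plain Python lists; the function names `scale`, `add`, and `linear_combination` and the sample vectors are illustrative choices, not taken from any of the resources above.

```python
def scale(c, v):
    """Scalar multiple c*v, computed entry by entry."""
    return [c * entry for entry in v]


def add(u, v):
    """Vector sum u + v; both vectors must have the same size."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same size")
    return [a + b for a, b in zip(u, v)]


def linear_combination(weights, vectors):
    """Return weights[0]*vectors[0] + ... + weights[k-1]*vectors[k-1]."""
    if not vectors or len(weights) != len(vectors):
        raise ValueError("need one weight per vector and at least one vector")
    result = [0] * len(vectors[0])
    for c, v in zip(weights, vectors):
        result = add(result, scale(c, v))
    return result


# Hypothetical example in R^2: weights 2 and -1 applied to v1 and v2.
v1 = [1, 2]
v2 = [3, -1]
b = linear_combination([2, -1], [v1, v2])
print(b)  # [-1, 5], since 2*(1, 2) - 1*(3, -1) = (-1, 5)
```

Asking whether a given vector lies in the span of `v1` and `v2` amounts to asking whether some choice of weights makes `linear_combination` return that vector.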

1.4 The Matrix Equation Ax = b

The product of a matrix and a vector is defined, and used to show that a system of linear equations is equivalent to a single equation involving a matrix and vectors. The example uses a 2x3 system.

Definition of matrix-scalar multiplication math.la.d.mat.scalar.mult

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

A matrix equation is equivalent to a linear system math.la.t.mat.eqn.linsys

- License

- CC-BY-SA-4.0

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Advice to instructors for in-class activities on matrix-vector multiplication and translating between the various equivalent notation forms of linear systems, and suggestions for how this topic can be used to motivate future topics.

Definition of matrix-vector product, as a linear combination of column vectors math.la.d.mat.vec.prod

A matrix equation is equivalent to a linear system math.la.t.mat.eqn.linsys

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Handout

- Timeframe

- In-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

A 3x3 matrix equation Ax=b is solved for two different values of b. In one case there is no solution, and in another there are infinitely many solutions. These examples illustrate a theorem about linear combinations of the columns of the matrix A.

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of no solutions math.la.e.linsys.3x3.soln.row_reduce.z

Example of solving a 3-by-3 system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.row_reduce.i

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

Definition of matrix equation math.la.d.mat.eqn

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

For a specific 3x3 matrix, solve Ax=0 by row reducing an augmented matrix.

Example of solving a 3-by-3 homogeneous matrix equation math.la.e.mat.eqn.3x3.homog.solve

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Review

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

Learning goals: 1. What are the dimension (size) requirements for two matrices so that they can be multiplied together? 2. What is the product of two matrices, when it exists?

Definition of matrix multiplication in terms of column vectors math.la.d.mat.mult.col

Definition of matrix-vector product, as a linear combination of column vectors math.la.d.mat.vec.prod

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Review

- Language

- English

- Content Type

- text/html; charset=utf-8

A linear combination of a set of vectors is defined. Determine if a vector in R^2 is in the span of two other vectors. The span of a set of vectors is related to the columns of a matrix. (need topic: Determine if a vector in R^2 is in the span of two other vectors.)

Definition of linear combination of vectors, coordinate vector space math.la.d.vec.lincomb.coord

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- Created On

- February 20th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Equivalent statements for a matrix A: for every right-hand side b, the system Ax=b has a solution; every b is a linear combination of the columns of A; the span of the columns of A is maximal; A has a pivot position in every row.

Equivalence theorem: the equation Ax=b has a solution for all b. math.la.t.equiv.mat.eqn

Equivalence theorem: the columns of A span R^n (or C^n). math.la.t.equiv.col.span

Equivalence theorem: there is a pivot position in every row of A. math.la.t.equiv.row.pivot

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- Created On

- August 25th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

In Section VO we defined vector addition and scalar multiplication. These two operations combine nicely to give us a construction known as a linear combination, a construct that we will work with throughout this course.

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We know how to add vectors and how to multiply them by scalars. Together, these operations give us the possibility of making linear combinations. Similarly, we know how to add matrices and how to multiply matrices by scalars. In this section we mix all these ideas together and produce an operation known as matrix multiplication. This will lead to some results that are both surprising and central. We begin with a definition of how to multiply a vector by a matrix.

Definition of matrix-vector product, as a linear combination of column vectors math.la.d.mat.vec.prod

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

A matrix-vector product (Definition MVP) is a linear combination of the columns of the matrix and this allows us to connect matrix multiplication with systems of equations via Theorem SLSLC. Row operations are linear combinations of the rows of a matrix, and of course, reduced row-echelon form (Definition RREF) is also intimately related to solving systems of equations. In this section we will formalize these ideas with two key definitions of sets of vectors derived from a matrix.

The matrix equation Ax=b has a solution if and only if b is a linear combination of the columns of A. math.la.t.mat.eqn.lincomb

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We know how to add vectors and how to multiply them by scalars. Together, these operations give us the possibility of making linear combinations. Similarly, we know how to add matrices and how to multiply matrices by scalars. In this section we mix all these ideas together and produce an operation known as matrix multiplication. This will lead to some results that are both surprising and central. We begin with a definition of how to multiply a vector by a matrix.

A matrix equation is equivalent to a linear system math.la.t.mat.eqn.linsys

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

We know how to add vectors and how to multiply them by scalars. Together, these operations give us the possibility of making linear combinations. Similarly, we know how to add matrices and how to multiply matrices by scalars. In this section we mix all these ideas together and produce an operation known as matrix multiplication. This will lead to some results that are both surprising and central. We begin with a definition of how to multiply a vector by a matrix.

Matrix-scalar product is commutative math.la.t.mat.scalar.prod.commut

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

A matrix-vector product (Definition MVP) is a linear combination of the columns of the matrix and this allows us to connect matrix multiplication with systems of equations via Theorem SLSLC. Row operations are linear combinations of the rows of a matrix, and of course, reduced row-echelon form (Definition RREF) is also intimately related to solving systems of equations. In this section we will formalize these ideas with two key definitions of sets of vectors derived from a matrix.

Equivalence theorem: the columns of A span R^n (or C^n). math.la.t.equiv.col.span

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Example of matrix-vector product, as a linear combination of column vectors math.la.e.mat.vec.prod

Example of solving a 3-by-3 matrix equation math.la.e.mat.eqn.3x3.solve

Definition of matrix-vector product, each entry separately math.la.d.mat.vec.prod.coord

Example of matrix-vector product, each entry separately math.la.e.mat.vec.prod.coord

Matrix-vector product is associative math.la.t.mat.vec.prod.assoc
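
Two definitions of the matrix-vector product appear in this section: as a linear combination of the columns of A, and entry by entry. The sketch below computes both and checks that they agree. It assumes a matrix is stored as a list of rows; the names `matvec_by_columns` and `matvec_by_entries` and the 2x3 sample matrix are hypothetical, chosen only for illustration.

```python
def column(A, j):
    """Return column j of the matrix A (stored as a list of rows)."""
    return [row[j] for row in A]


def matvec_by_columns(A, x):
    """Compute Ax as x[0]*(column 0) + ... + x[n-1]*(column n-1)."""
    m, n = len(A), len(A[0])
    if len(x) != n:
        raise ValueError("x needs one entry per column of A")
    result = [0] * m
    for j in range(n):
        result = [r + x[j] * c for r, c in zip(result, column(A, j))]
    return result


def matvec_by_entries(A, x):
    """Compute Ax one entry at a time: entry i is (row i of A) dotted with x."""
    if any(len(row) != len(x) for row in A):
        raise ValueError("x needs one entry per column of A")
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical 2x3 example.
A = [[1, 2, -1],
     [0, 3, 4]]
x = [2, 1, 3]

print(matvec_by_columns(A, x))  # [1, 15]
print(matvec_by_entries(A, x))  # [1, 15]
```

Read in the column form, the equation Ax = b asks for weights x that express b as a linear combination of the columns of A, which is the content of the theorem tagged math.la.t.mat.eqn.lincomb.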

1.5 Solution Sets of Linear Systems

How to compute all solutions to a general system $Ax=b$ of linear equations, and the connection to the corresponding homogeneous system $Ax=0$. Visualization of the geometry of solution sets. Consistent systems and their solution using row reduction.

Example of solving a 3-by-3 homogeneous system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.homog.row_reduce.i

Definition of homogeneous linear system of equations math.la.d.linsys.homog

A homogeneous system has a nontrivial solution if and only if it has a free variable. math.la.t.linsys.homog.nontrivial

Parametric vector form of the solution set of a system of linear equations math.la.c.linsys.soln_set.vec

Definition of trivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.trivial

Definition of nontrivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.nontrivial

- Created On

- August 22nd, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

Homogeneous systems of linear equations; trivial versus nontrivial solutions of homogeneous systems; how to find nontrivial solutions; how to know from the reduced row-echelon form of a matrix whether the corresponding homogeneous system has nontrivial solutions.

Definition of homogeneous linear system of equations math.la.d.linsys.homog

Definition of trivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.trivial

Definition of nontrivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.nontrivial

A homogeneous system has a nontrivial solution if and only if it has a free variable. math.la.t.linsys.homog.nontrivial

Example of solving a 3-by-3 homogeneous system of linear equations by row-reducing the augmented matrix, in the case of infinitely many solutions math.la.e.linsys.3x3.soln.homog.row_reduce.i

- Created On

- August 25th, 2017

- 7 years ago

- Views

- 4

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

In this section we specialize to systems of linear equations where every equation has a zero as its constant term. Along the way, we will begin to express more and more ideas in the language of matrices and begin a move away from writing out whole systems of equations. The ideas initiated in this section will carry through the remainder of the course.

Definition of homogeneous linear system of equations math.la.d.linsys.homog

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In this section we specialize to systems of linear equations where every equation has a zero as its constant term. Along the way, we will begin to express more and more ideas in the language of matrices and begin a move away from writing out whole systems of equations. The ideas initiated in this section will carry through the remainder of the course.

Definition of trivial solution to a homogeneous linear system of equations math.la.d.linsys.homog.trivial

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

math.la.e.linsys.3x1.soln.homog.row_reduce.i

math.la.e.linsys.3x3.soln.row_reduce.i.parametric

math.la.t.nonhomog.particular_plus_homog
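
The relationship between a nonhomogeneous system and its homogeneous counterpart can be checked numerically: if p solves Ax = b and h solves Ax = 0, then p + t·h solves Ax = b for every scalar t. The sketch below verifies this for a handful of integer values of t. The matrix `A` and the vectors `p`, `h`, and `b` are made-up illustrative data, and checking finitely many values of t is a sanity check, not a proof.

```python
def matvec(A, x):
    """Matrix-vector product Ax, with A stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical 3x3 matrix whose third row is the sum of the first two,
# so the homogeneous system Ax = 0 has a free variable.
A = [[1, 1, 0],
     [0, 1, 1],
     [1, 2, 1]]

p = [1, 0, 2]    # a particular solution of Ax = b
h = [1, -1, 1]   # a nontrivial solution of Ax = 0
b = [1, 2, 3]


def solution(t):
    """Parametric vector form x = p + t*h."""
    return [pi + t * hi for pi, hi in zip(p, h)]


print(matvec(A, h))            # [0, 0, 0]
print(matvec(A, p))            # [1, 2, 3]
print(matvec(A, solution(5)))  # [1, 2, 3]
```

Geometrically, the solution set of Ax = b in this example is the line through p parallel to h, a translate of the solution set of Ax = 0.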

1.6 Applications of Linear Systems

1.7 Linear Independence

Linear independence is defined, followed by a worked example of 3 vectors in R^3.

Determine if a particular set of vectors in R^3 is linearly independent math.la.e.vec.linindep.r3

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Linear independence in-class activity

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

If a set of vectors in R^n (or C^n) contains more than n elements, then the set is linearly dependent. math.la.t.vec.lindep.more.rncn

If a set of vectors contains the zero vector, then the set is linearly dependent. math.la.t.vec.lindep.zero

Theorem: a set of vectors is linearly dependent if and only if one of the vectors can be written as a linear combination of the other vectors, coordinate vector space. math.la.t.vec.lindep.coord

Definition of linear dependence relation math.la.d.vec.lindep.relation

- License

- GFDL-1.3

- Created On

- June 8th, 2017

- 7 years ago

- Views

- 3

- Type

- Handout

- Timeframe

- In-class

- Perspective

- Example

- Language

- English

- Content Type

- application/pdf

Video Lesson from University of Waterloo.

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

Determine if a particular set of vectors in R^3 is linearly independent math.la.e.vec.linindep.r3

Definition of linear dependence relation math.la.d.vec.lindep.relation

Theorem: a set of vectors is linearly dependent if and only if one of the vectors can be written as a linear combination of the other vectors, coordinate vector space. math.la.t.vec.lindep.coord

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 2

- Type

- Video

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html;charset=UTF-8

Quiz from the University of Waterloo.

Definition of vector-scalar multiplication, coordinate vector space math.la.d.vec.scalar.mult.coord

Definition of vector sum/addition, coordinate vector space math.la.d.vec.sum.coord

Example of vector-scalar multiplication in R^2 math.la.e.vec.scalar.mult.r2

Example of linear combination of vectors in R^2 math.la.e.vec.lincomb.r2

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

Determine if a particular vector is in the span of a set of vectors in R^3 math.la.e.vec.span.of.r3

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

Determine if a particular set of vectors in R^3 is linearly independent math.la.e.vec.linindep.r3

Definition of linear dependence relation math.la.d.vec.lindep.relation

A set of two vectors is linearly dependent if and only if at least one is a scalar multiple of the other. math.la.t.vec.lindep.two

Theorem: a set of vectors is linearly dependent if and only if one of the vectors can be written as a linear combination of the other vectors, coordinate vector space. math.la.t.vec.lindep.coord

- Created On

- October 23rd, 2013

- 11 years ago

- Views

- 4

- Type

- Unknown

- Timeframe

- Post-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html;charset=UTF-8

Linear independence is one of the most fundamental conceptual ideas in linear algebra, along with the notion of a span. So this section, and the subsequent Section LDS, will explore this new idea.

Definition of linear dependence relation math.la.d.vec.lindep.relation

math.la.d.vec.lindep.relation.trivial

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

In any linearly dependent set there is always one vector that can be written as a linear combination of the others. This is the substance of the upcoming Theorem DLDS. Perhaps this will explain the use of the word “dependent.” In a linearly dependent set, at least one vector “depends” on the others (via a linear combination).

Theorem: a set of vectors is linearly dependent if and only if one of the vectors can be written as a linear combination of the other vectors, coordinate vector space. math.la.t.vec.lindep.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Linear independence is one of the most fundamental conceptual ideas in linear algebra, along with the notion of a span. So this section, and the subsequent Section LDS, will explore this new idea.

Equivalence theorem: the columns of A are linearly independent. math.la.t.equiv.col.linindep

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Linear independence is one of the most fundamental conceptual ideas in linear algebra, along with the notion of a span. So this section, and the subsequent Section LDS, will explore this new idea.

Definition of linearly independent set of vectors: if a linear combination is zero, then every coefficient is zero, coordinate vector space. math.la.d.vec.linindep.coord

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Linear independence is one of the most fundamental conceptual ideas in linear algebra, along with the notion of a span. So this section, and the subsequent Section LDS, will explore this new idea.

If a set of vectors in R^n (or C^n) contains more than n elements, then the set is linearly dependent. math.la.t.vec.lindep.more.rncn

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Equivalence theorem: the equation Ax=0 has only the trivial solution. math.la.t.equiv.mat.eqn.homog

math.la.p.vec.lindep.more.rncn

math.la.p.vec.lindep.zero

math.la.p.vec.lindep.coord
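
One way to test linear independence computationally is to place the vectors as the columns of a matrix, row-reduce, and count pivots: the set is independent exactly when every column has a pivot, so that Ax = 0 has only the trivial solution. The following is a minimal sketch under that approach, using exact `Fraction` arithmetic to avoid rounding issues; the function names and test vectors are illustrative assumptions, not part of any listed resource.

```python
from fractions import Fraction


def pivot_count(vectors):
    """Count pivots after forward elimination on the matrix whose columns are `vectors`."""
    if not vectors:
        return 0
    n, k = len(vectors[0]), len(vectors)
    # M has n rows and k columns; column j holds vectors[j].
    M = [[Fraction(vectors[j][i]) for j in range(k)] for i in range(n)]
    row = 0
    for col in range(k):
        if row == n:
            break  # no rows left, so no further pivots are possible
        # Look for a nonzero entry in this column at or below the current row.
        pivot_row = next((r for r in range(row, n) if M[r][col] != 0), None)
        if pivot_row is None:
            continue  # this column has no pivot (it corresponds to a free variable)
        M[row], M[pivot_row] = M[pivot_row], M[row]
        for r in range(row + 1, n):
            factor = M[r][col] / M[row][col]
            M[r] = [a - factor * p for a, p in zip(M[r], M[row])]
        row += 1
    return row


def is_linearly_independent(vectors):
    """True when every column is a pivot column."""
    return pivot_count(vectors) == len(vectors)


print(is_linearly_independent([[1, 2, 3], [0, 1, 4], [5, 6, 0]]))  # True
print(is_linearly_independent([[1, 2, 3], [2, 4, 6]]))             # False
```

The same function illustrates two theorems tagged above: any set containing the zero vector is reported as dependent, and so is any set of more than n vectors in R^n, because an n-row matrix has at most n pivots.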

1.8 Introduction to Linear Transformations

Linear transformations are defined, and some small examples (and non-examples) are explored. (need tag for R^2 -> R^2 example, general)

Definition of linear transformation, coordinate vector space math.la.d.lintrans.coord

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Two proofs, with discussion, of the fact that an abstract linear transformation maps 0 to 0.

A linear transformation maps zero to zero. math.la.t.lintrans.z

Definition of linear transformation, arbitrary vector space math.la.d.lintrans.arb

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- Pre-class

- Perspective

- Proof

- Language

- English

- Content Type

- text/html; charset=utf-8

Examples of special types of linear transformation from R^2 to R^2: dilation, projection, and shear. (Some issues with the video: things re-start around the 10 second mark, and at 3:46 the word "projection" is said, when it should be "transformation". Also, at the end maybe it could be described why it is called a 'shear'.)

Geometric properties of linear transformations on R^2 math.la.c.lintrans.geometric.r2

Example of a linear transformation on R^2: projection math.la.e.lintrans.projection.r2

Example of a linear transformation on R^2: shear math.la.e.lintrans.shear.r2

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Post-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

After watching a video defining linear transformations and giving examples of 2-D transformations, students should be able to answer the questions in this quiz.

Example of a linear transformation on R^2: projection math.la.e.lintrans.projection.r2

Matrix-vector multiplication is a linear transformation. math.la.t.mat.vec.mult.lintrans

A linear transformation is given by a matrix whose columns are the images of the standard basis vectors, coordinate setting. math.la.t.lintrans.mat.basis.standard.coord

- Created On

- June 8th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- Pre-class

- Language

- English

- Content Type

- text/html; charset=utf-8

Use matrix transformations to motivate the concept of linear transformation; examples of matrix transformations

Matrices act as transformations by multiplying vectors math.la.c.transformation.matrix

Definition of linear transformation, coordinate vector space math.la.d.lintrans.coord

Matrix-vector multiplication is a linear transformation. math.la.t.mat.vec.mult.lintrans

Example of a linear transformation on R^2: shear math.la.e.lintrans.shear.r2

Example of a linear transformation on R^2: projection math.la.e.lintrans.projection.r2

- Created On

- August 25th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

Motivation of the definition of a linear transformation using properties of matrices; examples; geometric intuition; matrix representation of a linear transformation

Definition of linear transformation, coordinate vector space math.la.d.lintrans.coord

Geometric properties of linear transformations math.la.c.lintrans.geometric

Geometric properties of linear transformations on R^2 math.la.c.lintrans.geometric.r2

Definition of matrix representation of a linear transformation, coordinate vector space math.la.d.lintrans.mat.repn.coord

Non-example of a linear transformation math.la.e.lintrans.not

Example of a linear transformation on R^2: shear math.la.e.lintrans.shear.r2

- Created On

- September 3rd, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

Early in Chapter VS we prefaced the definition of a vector space with the comment that it was “one of the two most important definitions in the entire course.” Here comes the other. Any capsule summary of linear algebra would have to describe the subject as the interplay of linear transformations and vector spaces. Here we go.

A linear transformation maps zero to zero. math.la.t.lintrans.z

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Early in Chapter VS we prefaced the definition of a vector space with the comment that it was “one of the two most important definitions in the entire course.” Here comes the other. Any capsule summary of linear algebra would have to describe the subject as the interplay of linear transformations and vector spaces. Here we go.

A linear transformation of a linear combination is the same linear combination of the transformed vectors. math.la.t.lintrans.lincomb

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 2

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Early in Chapter VS we prefaced the definition of a vector space with the comment that it was “one of the two most important definitions in the entire course.” Here comes the other. Any capsule summary of linear algebra would have to describe the subject as the interplay of linear transformations and vector spaces. Here we go.

Matrices act as transformations by multiplying vectors math.la.c.transformation.matrix

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

math.functions.d.transformation

math.functions.d.transformation.domain

math.functions.d.transformation.codomain

math.functions.d.transformation.image

math.functions.d.transformation.range

Example of a linear transformation on R^2: rotation math.la.e.lintrans.rotation.r2

Example of a linear transformation on R^3: rotation math.la.e.lintrans.rotation.r3
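
The defining property of a linear transformation, T(c·u + d·v) = c·T(u) + d·T(v), can be spot-checked in code. The sketch below compares a shear of R^2 (a linear transformation) with a translation (a non-example). The specific maps `shear` and `translate`, the helper `respects_combination`, and the sample vectors are all hypothetical. Passing such a check for one choice of inputs does not prove linearity, but failing it once is enough to rule linearity out.

```python
def shear(v):
    """Horizontal shear T(x, y) = (x + 2y, y), i.e. multiplication by [[1, 2], [0, 1]]."""
    x, y = v
    return [x + 2 * y, y]


def translate(v):
    """Translation T(x, y) = (x + 1, y); not linear because it moves the origin."""
    x, y = v
    return [x + 1, y]


def respects_combination(T, c, u, d, v):
    """Check T(c*u + d*v) == c*T(u) + d*T(v) for one choice of scalars and vectors."""
    combo = [c * a + d * b for a, b in zip(u, v)]
    left = T(combo)
    right = [c * a + d * b for a, b in zip(T(u), T(v))]
    return left == right


u, v = [1, 2], [3, -1]
print(respects_combination(shear, 2, u, -3, v))      # True
print(respects_combination(translate, 2, u, -3, v))  # False
print(translate([0, 0]))  # [1, 0]: the zero vector is not mapped to zero
```

The last line connects to the theorem tagged math.la.t.lintrans.z: a linear transformation must map zero to zero, so any map that fails this quick test cannot be linear.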

1.9 The Matrix of a Linear Transformation

Examples of special types of linear transformation from R^2 to R^2: dilation, projection, and shear. (Some issues with the video: things re-start around the 10 second mark, and at 3:46 the word "projection" is said, when it should be "transformation". Also, at the end maybe it could be described why it is called a 'shear'.)

Geometric properties of linear transformations on R^2 math.la.c.lintrans.geometric.r2

Example of a linear transformation on R^2: projection math.la.e.lintrans.projection.r2

Example of a linear transformation on R^2: shear math.la.e.lintrans.shear.r2

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Post-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

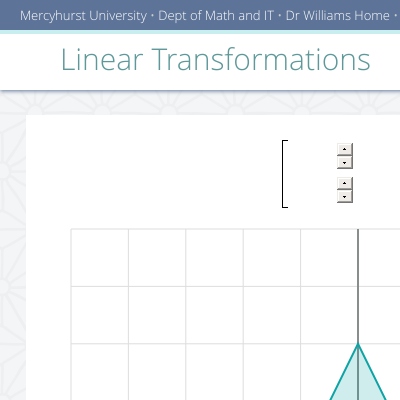

Visualize 2-d linear transformations by looking at the image of a geometric object. (Need topic: Visualize a linear transformation on R^2 by its effect on a region.)

Geometric properties of linear transformations on R^2 math.la.c.lintrans.geometric.r2

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 2

- Type

- Applet

- Timeframe

- Review

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=UTF-8

After watching a video defining linear transformations and giving examples of 2-D transformations, students should be able to answer the questions in this quiz.

Example of a linear transformation on R^2: projection math.la.e.lintrans.projection.r2

Matrix-vector multiplication is a linear transformation. math.la.t.mat.vec.mult.lintrans

A linear transformation is given by a matrix whose columns are the images of the standard basis vectors, coordinate setting. math.la.t.lintrans.mat.basis.standard.coord

- Created On

- June 8th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- Pre-class

- Language

- English

- Content Type

- text/html; charset=utf-8

In-class activity to be completed after an introduction to transformations and ideally in teams. In part 1, students are guided to discover the theorem describing the matrix of a linear transformation from R^n to R^m. In part 2, students learn the one-to-one and onto properties of linear transformations, and are asked to relate these properties to the properties of the matrices (linear independence of columns and columns spanning the codomain).

Definition of the standard matrix for a linear transformation, coordinate setting math.la.d.lintrans.mat.basis.standard.coord

- Created On

- June 8th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- In-class

- Language

- English

- Content Type

- text/html; charset=utf-8

Motivation of the definition of a linear transformation using properties of matrices; examples; geometric intuition; matrix representation of a linear transformation

Definition of linear transformation, coordinate vector space math.la.d.lintrans.coord

Geometric properties of linear transformations math.la.c.lintrans.geometric

Geometric properties of linear transformations on R^2 math.la.c.lintrans.geometric.r2

Definition of matrix representation of a linear transformation, coordinate vector space math.la.d.lintrans.mat.repn.coord

Non-example of a linear transformation math.la.e.lintrans.not

Example of a linear transformation on R^2: shear math.la.e.lintrans.shear.r2

- Created On

- September 3rd, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Language

- English

- Content Type

- text/html; charset=utf-8

Early in Chapter VS we prefaced the definition of a vector space with the comment that it was “one of the two most important definitions in the entire course.” Here comes the other. Any capsule summary of linear algebra would have to describe the subject as the interplay of linear transformations and vector spaces. Here we go.

A linear transformation is given by a matrix whose columns are the images of the standard basis vectors, coordinate setting. math.la.t.lintrans.mat.basis.standard.coord
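As a minimal sketch of the statement above, the following Python builds the standard matrix of a map by applying it to each standard basis vector and using the images as columns. The map `T` and the helper names `standard_matrix` and `apply` are hypothetical, chosen only for illustration.

```python
def standard_matrix(T, n):
    """Return the standard matrix of T: R^n -> R^m as a list of rows."""
    # The images of the standard basis vectors e_1, ..., e_n are the columns.
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]
        columns.append(T(e_j))
    m = len(columns[0])
    # Turn the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def apply(A, x):
    """Compute the matrix-vector product Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical linear map T(x, y) = (x + 2y, 3y, x - y) from R^2 to R^3.
def T(v):
    x, y = v
    return [x + 2 * y, 3 * y, x - y]


A = standard_matrix(T, 2)
print(A)                 # rows of the 3x2 standard matrix
print(apply(A, [4, 5]))  # agrees with T([4, 5])
```

Because `T` is linear, multiplying by `A` reproduces `T` on every input, not only on the basis vectors.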

- License

- GFDL-1.2

- Submitted At

- September 11th, 2017

- 7 years ago

- Views

- 3

- Type

- Textbook

- Language

- English

- Content Type

- text/html

Matrix describing a rotation of the plane math.la.t.mat.rotation
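For illustration, here is a short Python sketch of a rotation matrix, assuming the usual convention of a counterclockwise rotation about the origin by an angle `theta` in radians. The function names are hypothetical.

```python
import math


def rotation_matrix(theta):
    """Matrix of the counterclockwise rotation of R^2 by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    # Columns are the images of e_1 and e_2 under the rotation.
    return [[c, -s],
            [s, c]]


def apply(A, x):
    """Compute the matrix-vector product Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


R = rotation_matrix(math.pi / 2)
image = apply(R, [1.0, 0.0])
print(image)  # approximately [0, 1], up to floating-point rounding
```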

math.la.math.functions.d.mapping.onto

math.la.math.functions.d.mapping.onetoone

math.la.t.lintrans.onetoone.ker

math.la.t.lintrans.onto.span

math.la.t.lintrans.onetoone.linindep

1.10 Linear Models in Business, Science, and Engineering

Supplementary Exercises

2 Matrix Algebra

2.1 Matrix Operations

Motivation and definition of the inverse of a matrix

Definition of matrix inverse math.la.d.mat.inv

Definition of identity matrix math.la.d.mat.identity

Matrix inverses are unique: if A and B are square matrices, then AB=I implies that A=B^-1 and B=A^-1. math.la.t.mat.inv.unique
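A small numerical check of this statement, using a hypothetical pair of 2x2 matrices chosen so that AB = I. The sketch confirms that BA = I as well, so each matrix is the inverse of the other.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


I2 = [[1, 0], [0, 1]]

# Hypothetical example matrices with AB = I.
A = [[2, 1],
     [5, 3]]
B = [[3, -1],
     [-5, 2]]

AB = mat_mul(A, B)
BA = mat_mul(B, A)
print(AB == I2, BA == I2)
```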

- License

- (CC-BY-NC-SA-4.0 OR CC-BY-SA-4.0)

- Created On

- January 5th, 2017

- 8 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

The product of a matrix and a vector is defined and used to show that a system of linear equations is equivalent to a single matrix equation Ax=b. The example uses a 2x3 system.

Definition of matrix-scalar multiplication math.la.d.mat.scalar.mult

A linear system is equivalent to a vector equation. math.la.t.linsys.vec

A matrix equation is equivalent to a linear system math.la.t.mat.eqn.linsys
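The equivalence can be sketched in Python with a hypothetical system of 2 equations in 3 unknowns. Computing Ax row by row gives the left-hand sides of the linear system, and computing it as a combination of the columns of A gives the vector equation; both produce the same vector.

```python
# Hypothetical system:
#   x1 + 2*x2 -   x3 = 4
#        x2 + 3*x3 = 5
A = [[1, 2, -1],
     [0, 1, 3]]
b = [4, 5]
x = [1, 2, 1]  # a candidate solution

# Row view: each entry of Ax is the left-hand side of one equation.
by_rows = [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Column view: Ax = x1*a1 + x2*a2 + x3*a3, where a_j are the columns of A.
by_columns = [0] * len(A)
for j, xj in enumerate(x):
    for i in range(len(A)):
        by_columns[i] += xj * A[i][j]

print(by_rows, by_columns, by_rows == b)
```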

- License

- CC-BY-SA-4.0

- Created On

- February 15th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Notation for matrix entries, diagonal matrix, square matrix, identity matrix, and zero matrix.

Notation for entry of matrix math.la.d.mat.entry

Definition of m by n matrix math.la.d.mat.m_by_n

Definition of diagonal matrix math.la.d.mat.diagonal

Definition of identity matrix math.la.d.mat.identity

Definition of zero matrix math.la.d.mat.zero

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Definition of sum of matrices, product of a scalar and a matrix

Definition of sum of matrices math.la.d.mat.sum

Definition of matrix-scalar multiplication math.la.d.mat.scalar.mult

Matrix addition is commutative and associative. math.la.t.mat.add.commut_assoc

Matrix-scalar multiplication is commutative, associative, and distributive. math.la.t.mat.scalar.mult.commut_assoc

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

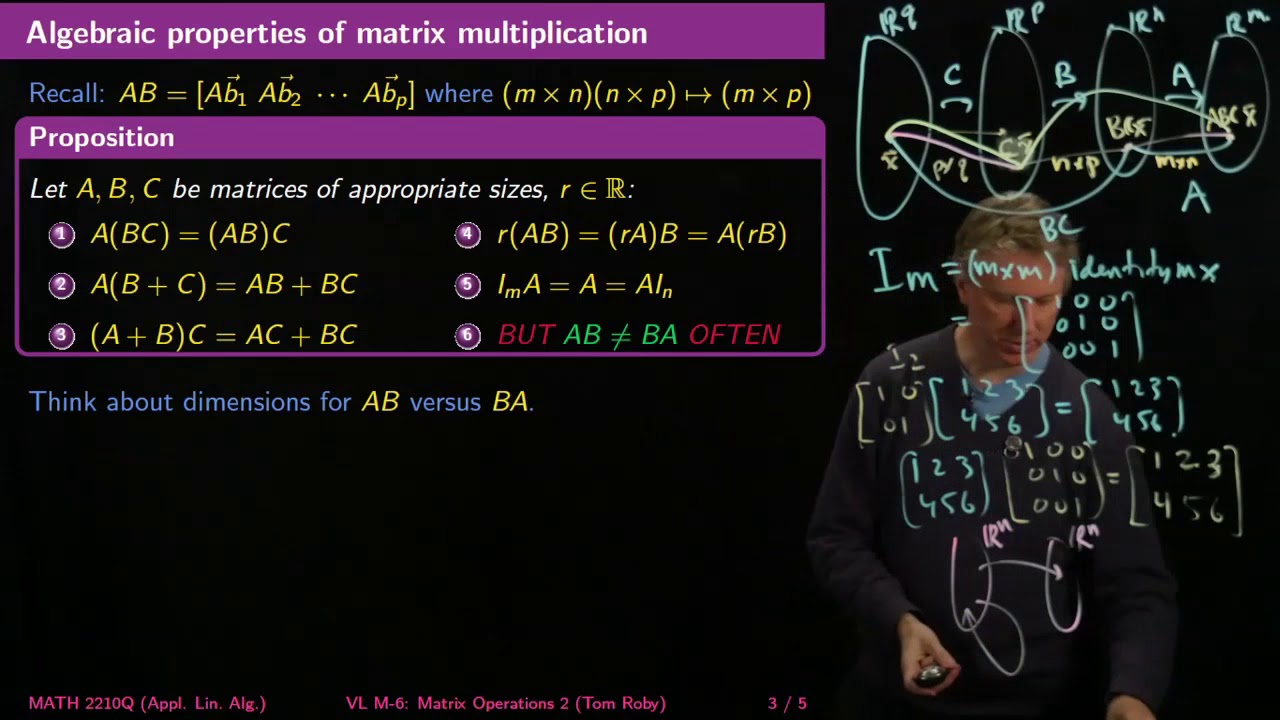

Learning goals: 1. What are the dimension (size) requirements for two matrices to be multiplied together? 2. What is the product of two matrices, when it exists?

Definition of matrix multiplication in terms of column vectors math.la.d.mat.mult.col

Definition of matrix-vector product, as a linear combination of column vectors math.la.d.mat.vec.prod
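The two definitions above can be sketched together in Python: `mat_vec` forms Ax as a linear combination of the columns of A, and `mat_mul` builds AB one column at a time as A times each column of B. The function names and example matrices are hypothetical. The size check reflects the requirement that the number of columns of A equal the number of rows of B.

```python
def mat_vec(A, x):
    """Return Ax as a linear combination of the columns of A."""
    m, n = len(A), len(A[0])
    if len(x) != n:
        raise ValueError("x needs one entry per column of A")
    result = [0] * m
    for j in range(n):
        for i in range(m):
            result[i] += x[j] * A[i][j]
    return result


def mat_mul(A, B):
    """Return AB, whose j-th column is A times the j-th column of B."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must match rows of B")
    p = len(B[0])
    columns = [mat_vec(A, [row[j] for row in B]) for j in range(p)]
    # Reassemble the columns into a list of rows.
    return [[columns[j][i] for j in range(p)] for i in range(len(A))]


A = [[1, 2],
     [3, 4],
     [5, 6]]        # 3x2
B = [[1, 0, 2],
     [0, 1, 3]]     # 2x3

AB = mat_mul(A, B)  # a 3x3 matrix
print(AB)
```

With these sizes, `mat_mul(A, A)` raises `ValueError`, since a 3x2 matrix cannot be multiplied by a 3x2 matrix.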

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Review

- Language

- English

- Content Type

- text/html; charset=utf-8

A 2x2 example is used to show that AB does not always equal BA.

Matrix multiplication is not commutative in general. math.la.c.mat.mult.commut

Example of multiplying 2x2 matrices math.la.e.mat.mult.2x2
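One possible 2x2 illustration in Python, with matrices picked for this sketch rather than taken from the video:

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[1, 1],
     [0, 1]]
B = [[1, 0],
     [1, 1]]

AB = mat_mul(A, B)
BA = mat_mul(B, A)
print(AB)        # [[2, 1], [1, 1]]
print(BA)        # [[1, 1], [1, 2]]
print(AB == BA)  # False
```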

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Review

- Language

- English

- Content Type

- text/html; charset=utf-8

A 3x2 matrix and a 2x3 matrix are used to show that AB does not always equal BA.

Matrix multiplication is not commutative in general. math.la.c.mat.mult.commut

Example of multiplying nonsquare matrices math.la.e.mat.mult.nonsquare

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 3

- Type

- Video

- Timeframe

- Post-class

- Perspective

- Example

- Language

- English

- Content Type

- text/html; charset=utf-8

The transpose of a matrix is defined, and various properties are explored using numerical examples.

Definition of transpose of a matrix math.la.d.mat.transpose

The transpose of a product of matrices is the product of the transposes in reverse order. math.la.t.mat.mult.transpose

Matrix transpose is an involution. math.la.t.mat.transpose.involution
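Both transpose properties can be checked numerically. The following Python sketch uses hypothetical nonsquare matrices, which also shows why the order must be reversed: with A of size 2x3 and B of size 3x2, the product of the transposes in the original order would have a different size than the transpose of AB.

```python
def transpose(A):
    """Swap the rows and columns of A."""
    return [list(col) for col in zip(*A)]


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[1, 2, 0],
     [3, -1, 4]]    # 2x3
B = [[2, 1],
     [0, 5],
     [1, -2]]       # 3x2

lhs = transpose(mat_mul(A, B))             # (AB)^T, a 2x2 matrix
rhs = mat_mul(transpose(B), transpose(A))  # B^T A^T, also 2x2
print(lhs == rhs)                          # product rule

print(transpose(transpose(A)) == A)        # involution
```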

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Suggestions for in-class activities on matrix operations: addition, multiplication, transpose, and the fact that multiplication is not commutative.

Definition of sum of matrices math.la.d.mat.sum

Matrix multiplication is not commutative in general. math.la.c.mat.mult.commut

For matrices, AB=AC does not imply B=C in general. math.la.c.mat.mult.cancellation
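A small counterexample to cancellation, sketched in Python with matrices chosen for illustration. Here A is not invertible, which is what allows AB = AC with B different from C.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


A = [[1, 0],
     [0, 0]]   # singular: the second row is zero
B = [[1, 2],
     [3, 4]]
C = [[1, 2],
     [5, 6]]

AB = mat_mul(A, B)
AC = mat_mul(A, C)
print(AB == AC)  # True
print(B == C)    # False
```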

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 2

- Type

- Handout

- Timeframe

- In-class

- Language

- English

- Content Type

- text/html; charset=utf-8

The definition of matrix inverse is motivated by considering multiplicative inverse. The identity matrix and matrix inverse are defined.

Definition of matrix inverse math.la.d.mat.inv

Definition of identity matrix math.la.d.mat.identity

The identity matrix is the identity for matrix multiplication. math.la.t.mat.mult.identity

- Created On

- February 17th, 2017

- 7 years ago

- Views

- 2

- Type

- Video

- Timeframe

- Pre-class

- Perspective

- Introduction

- Language

- English

- Content Type

- text/html; charset=utf-8

Associative and distributive properties of matrix multiplication and addition; multiplication by the identity matrix; definition of the transpose of a matrix; transpose of the transpose, transpose of a sum, transpose of a product

Matrix-scalar multiplication is commutative, associative, and distributive. math.la.t.mat.scalar.mult.commut_assoc